HCF EP 004: Indexing and Querying

2024.12.12

This is Episode 4 of HandCraftedForum.

Last time I showed how to persist simple user account data to the database. This time I want to demonstrate indexing and querying, and since this is a "forum" project, let's do this with basic posts.

We'll do a very barebones kind of post. Think a "Tweet". Each post has nothing but the id of the user who posted it, the time it was posted, and its content.

We'll use two indexes: one to keep track of posts by user id, and one to enable querying posts by hashtags, like the old Twitter.

We don't have proper sessions yet, so we'll make the username part of the data we submit, and we will not validate the authorization.

On the user list page, we'll turn each user into a link. When you click on it, it takes you to a page where you can post on that user's behalf. The page will have a list of recent posts by the user, and a textbox at the top to create a new post.

Then for each post, we'll extract the hashtags and create a link for each one. When you click it, you see a page listing all posts that use this hashtag.

We basically have two new pages, and each one needs its own RPC to fetch data:

List latest posts by user id

Find posts by hash tag

We'll also need one procedure to create a post.

We'll start with the backend code, because it's surprisingly straightforward.

First, define the post struct:

    type Post struct {
        Id        int
        UserId    int
        CreatedAt time.Time
        Content   string
    }

We also define a method to extract hashtags from content. You can check out the implementation in the codebase linked at the end of this article.
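For readers who don't want to open the codebase, here is a minimal sketch of what a hashtag extractor could look like. It is not the implementation from the project: the regular expression, the lowercasing, and the de-duplication are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// hashTagPattern matches a '#' followed by one or more letters, digits,
// or underscores. This rule is an assumption; the project may use a different one.
var hashTagPattern = regexp.MustCompile(`#([\p{L}\p{N}_]+)`)

// ExtractHashTags returns the distinct hashtags found in content,
// lowercased and without the leading '#', in order of first appearance.
func ExtractHashTags(content string) []string {
	seen := make(map[string]bool)
	var tags []string
	for _, match := range hashTagPattern.FindAllStringSubmatch(content, -1) {
		tag := strings.ToLower(match[1]) // match[1] is the text after '#'
		if !seen[tag] {
			seen[tag] = true
			tags = append(tags, tag)
		}
	}
	return tags
}

func main() {
	fmt.Println(ExtractHashTags("Learning #Go and #vbolt, still loving #go"))
	// prints: [go vbolt]
}
```

Lowercasing means `#Go` and `#go` land under the same term in the index, which is probably what you want for a hashtag page, but it is a design choice rather than a requirement.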

Now we define three vbolt objects:

A bucket to hold the posts themselves

An index to group posts by user id

An index to query posts by hash tag

    var PostsBkt = vbolt.Bucket(&dbInfo, "posts", vpack.FInt, PackPost)

    // UserPostsIdx term: user id, priority: timestamp, target: post id
    var UserPostsIdx = vbolt.IndexExt(&dbInfo, "user-posts", vpack.FInt, vpack.UnixTimeKey, vpack.FInt)

    // HashTagsIdx term: hashtag, priority: timestamp, target: post id
    var HashTagsIdx = vbolt.IndexExt(&dbInfo, "hashtags", vpack.StringZ, vpack.UnixTimeKey, vpack.FInt)

One way to think of an index is as a bidirectional multi-map. You want to use a query term to find matching targets. Thus we use the terminology:

Term: the search term you want to use to query for data

Target: the matching item that you're targeting for queries

It's a multi-map, because each term can have multiple matching targets.

It's bidirectional, because you can go from term to targets, or from target to terms.

Structurally, it's a collection of entries sorted in ascending order. Each entry is composed of three elements: (term, priority, target). For each such entry, there's a mirror entry with (target, term, priority).
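To make the structure concrete, here is a small in-memory sketch of such an index. It is only an illustration of the idea and not how vbolt stores things: `MemIndex`, `SetTargetTerms`, `TermTargets`, and `TargetTerms` are hypothetical names, and the "setting terms replaces the previous ones" behavior is my reading of the description rather than a documented guarantee.

```go
package main

import (
	"fmt"
	"sort"
)

// Entry is one (term, priority, target) triple in the forward direction.
type Entry struct {
	Term     string
	Priority int64
	Target   int
}

// MemIndex is a hypothetical in-memory stand-in for an index.
type MemIndex struct {
	forward []Entry                  // kept sorted by (term, priority, target)
	reverse map[int]map[string]int64 // mirror direction: target -> term -> priority
}

func NewMemIndex() *MemIndex {
	return &MemIndex{reverse: make(map[int]map[string]int64)}
}

// SetTargetTerms replaces every term of target with terms, all at the same priority.
func (idx *MemIndex) SetTargetTerms(target int, terms []string, priority int64) {
	// Drop the target's previous forward entries, if it had any.
	if len(idx.reverse[target]) > 0 {
		kept := idx.forward[:0]
		for _, e := range idx.forward {
			if e.Target != target {
				kept = append(kept, e)
			}
		}
		idx.forward = kept
	}
	mirror := make(map[string]int64, len(terms))
	for _, term := range terms {
		if _, dup := mirror[term]; dup {
			continue // ignore repeated terms
		}
		mirror[term] = priority
		idx.forward = append(idx.forward, Entry{Term: term, Priority: priority, Target: target})
	}
	idx.reverse[target] = mirror
	sort.Slice(idx.forward, func(i, j int) bool {
		a, b := idx.forward[i], idx.forward[j]
		if a.Term != b.Term {
			return a.Term < b.Term
		}
		if a.Priority != b.Priority {
			return a.Priority < b.Priority
		}
		return a.Target < b.Target
	})
}

// TermTargets returns up to limit targets for term, in ascending priority order.
func (idx *MemIndex) TermTargets(term string, limit int) []int {
	// Binary search for the first entry whose term is >= the query term.
	start := sort.Search(len(idx.forward), func(i int) bool {
		return idx.forward[i].Term >= term
	})
	var targets []int
	for i := start; i < len(idx.forward) && idx.forward[i].Term == term && len(targets) < limit; i++ {
		targets = append(targets, idx.forward[i].Target)
	}
	return targets
}

// TargetTerms goes the other way: from a target to its terms, sorted alphabetically.
func (idx *MemIndex) TargetTerms(target int) []string {
	terms := make([]string, 0, len(idx.reverse[target]))
	for term := range idx.reverse[target] {
		terms = append(terms, term)
	}
	sort.Strings(terms)
	return terms
}

func main() {
	idx := NewMemIndex()
	idx.SetTargetTerms(1, []string{"go", "db"}, 100)
	idx.SetTargetTerms(2, []string{"go"}, 50)
	fmt.Println(idx.TermTargets("go", 10)) // prints: [2 1]
	fmt.Println(idx.TargetTerms(1))        // prints: [db go]
}
```

Because the forward entries are sorted by term first and priority second, all targets for one term sit next to each other, already in priority order. A query is a seek to the first matching entry followed by a short scan.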

We can think of an index as an accelerator structure to help us quickly find items matching a query term, or we can think of it as a way to group elements under a group key. If we choose to think of it as a collection, the grouping key would be the term.

(NOTE: I have recently introduced the concept of "collection" into vbolt, but it's still in flux, and it overlaps very much with Index, so it might go away, or the API might get merged with the Indexing API, so I choose for now to not talk about it much).

Now, when we create a new Post, we save it to the posts bucket, and we also add its entry in the user posts index and the hashtags index.

In both indexes, we use the creation timestamp as the priority. This means when we iterate matching posts, they will be ordered by creation time.

Updating the index happens by setting all the terms for a target, along with their priorities. This is the basic concept, but we have a few different functions for doing that, depending on the use case:

We can set several terms, associating a different priority to each

We can set several terms, with the same priority

We also have helpers to set one term (so we don't have to create a slice when calling the function), and we can ignore the priority parameter (using the zero value).

Here's the actual code to write the data to the bucket and indexes:

    vbolt.Write(ctx.Tx, PostsBkt, post.Id, &post)

    vbolt.SetTargetSingleTermExt(
        ctx.Tx,         // transaction
        UserPostsIdx,   // index reference
        post.Id,        // target
        post.CreatedAt, // priority
        post.UserId,    // term (single)
    )

    tags := ExtractHashTags(post.Content)

    vbolt.SetTargetTermsUniform(
        ctx.Tx,         // transaction
        HashTagsIdx,    // index reference
        post.Id,        // target
        tags,           // terms (slice)
        post.CreatedAt, // priority (same for all terms)
    )

I agree the API is not very clean or consistent; hopefully it will be cleaned up in a future release.

Now, to find posts by user id, we do the following:

    const Limit = 100

    var window = vbolt.Window{Limit: Limit}

    var postIds []int

    vbolt.ReadTermTargets(
        ctx.Tx,       // the transaction
        UserPostsIdx, // the index
        req.UserId,   // the query term
        &postIds,     // slice to store matching targets
        window,       // query windowing
    )

    vbolt.ReadSlice(ctx.Tx, PostsBkt, postIds, &resp.Posts)

We read the matching post ids into a slice of ints, then use that slice to read the posts themselves into a []Post slice.

Querying by hashtag is basically the same:

    var postIds []int

    vbolt.ReadTermTargets(
        ctx.Tx,      // the transaction
        HashTagsIdx, // the index
        req.Hashtag, // the query term
        &postIds,    // slice to store matching targets
        window,      // query windowing
    )

    vbolt.ReadSlice(ctx.Tx, PostsBkt, postIds, &resp.Posts)

This is all the code we need to index and query data.

There's no "SQL" layer that the code has to go through. There's no "ORM". We don't need an ORM. We don't need to create a "Repository" interface; vbolt is already a suitable programmatic interface, with reasonably designed building blocks. We don't need to mock the database for testing.

If we want to do some automated testing, we can write test code that uses a temporary file as the database, and then let it run the code. Then we can verify the inputs and outputs of the system as a whole.

In fact, let's do just that. (I was not going to do it until I wrote the above paragraph).

First we set up the temporary test database.

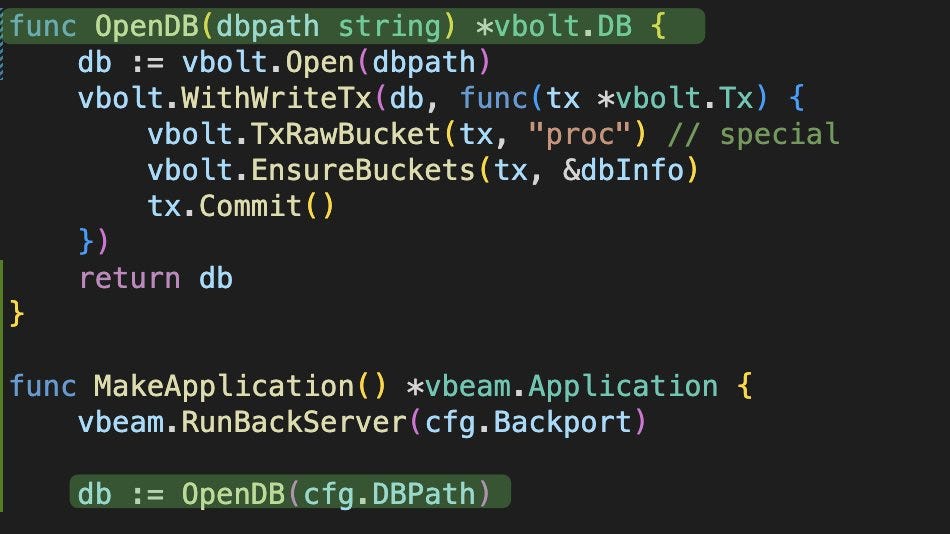

In case you are wondering what that OpenDB function is, I simply extracted the few lines we had inside MakeApplication.

Back to the test, we specify a few test cases and a set of expected outputs:

Then we execute the inputs and check the outputs

We can run the tests, and they pass!!

Now, you may wonder if it's really executing the tests! OK, we can easily add some logging and run the test command with `-v`

Then the output will be:

Now that we have an automated test that confirms the code is working, we can start coding the frontend.

It should be pretty obvious what we need to do. There's nothing new here so I'm not sure how much explanation is required for the frontend code.

Just like last time, we define a route, we fetch data for said route, and we display the data.

We'll do that next episode, and we will add some basic session token management as well.

Hope you enjoyed this episode and found it useful.

Download the code: EP004.zip

View the code online: HandCraftedForum/tree/EP004