This is Episode 6 of HandCraftedForum.

In the previous episode (EP005), I pointed out that we have a problem with code duplication when it comes to fetching posts by hashtag and by userid.

This again was not one of the planned topics for the series but I think it's worth a dedicated episode.

We have two almost identical code paths that are only different in a couple of small details.

We have two features:

Find posts by hashtag

Find posts by userid

For each we use a special Index object. The hash tag index uses the hashtag (string) as the key, while the user posts index uses the userid (int) as the key.

Because the index object is different, we have two different RPCs to call:

PostsByHashtag

PostsByUser

They both return the same thing, but have different inputs.

The input for PostsByUser looks like this:

struct {

UserId int

Cursor []byte

}The input for PostsByHashtag looks like this:

struct {

Hashtag string

Cursor []byte

}As you can see, each has two parameters: cursor, and the input to the index. Each chooses to call the input by a different name, and of course, the input has a different type: for one it's a string, for the other it's an int.

The functions themselves are almost identical though. They only differ in the name of the input parameters, the index they use, and the query term they pass to the index.

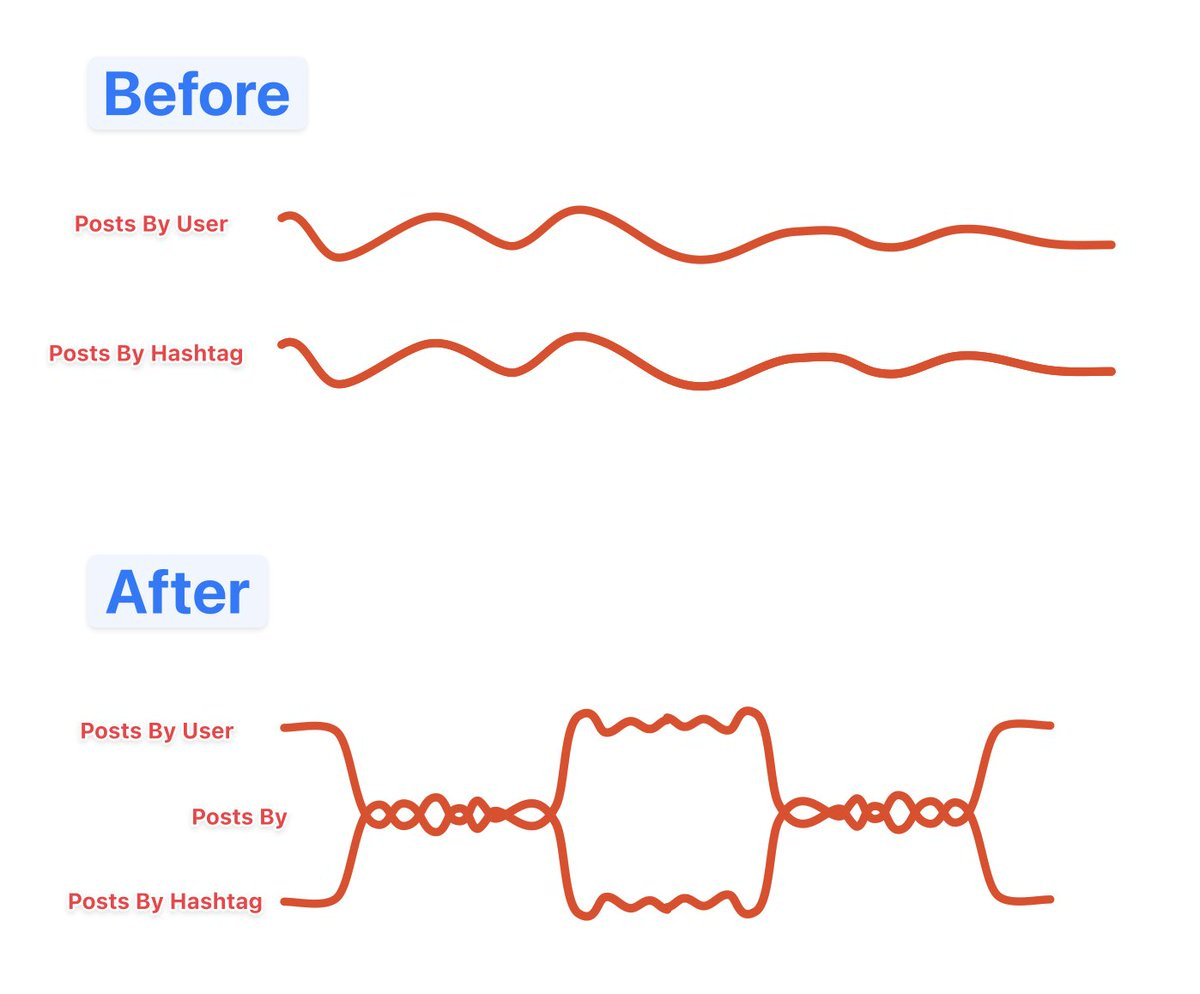

Now, this divergence on the server side will cause a divergence on the client side.

The frontend code has two divergent but almost identical blocks of code to implement the fetching of posts either by userid or by hashtag.

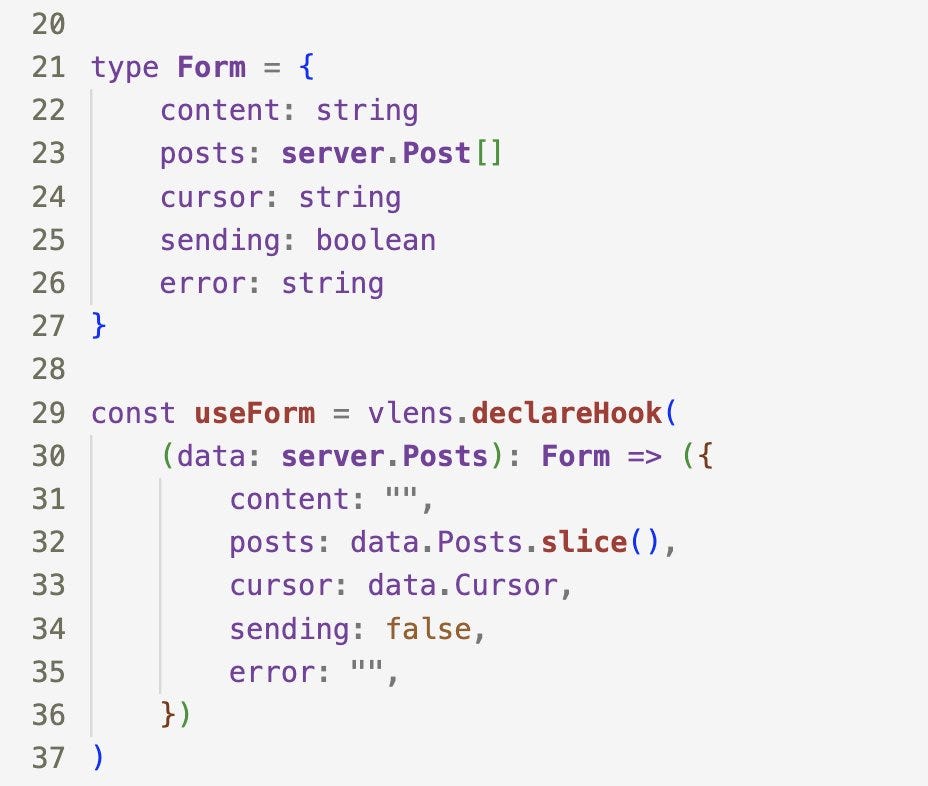

Fortunately, the response from both RPCs is the same, allowing us to use the same state object to track the post listing itself:

But the fetching of more posts is duplicated in two almost identical functions

The fragment where we display posts and show the "More" button is also almost identical but divergent

The starting point for this divergence was using two different index objects to answer each query, and each index using a different type for the query term. This resulted in two different server side procedures to perform the query, each takes a different request object that specifies the query term using a differently named field using a different type. This results in the client side having to implement "fetch more" in two separate functions, one for each backend procedure. Finally culminating in the post list and "more" button fragment to also diverge in two place, each one binding itself to its corresponding callback handler.

Naive approach

The naive approach is to notice the duplicate pattern, extract helper functions that take parameters with "holes" in them, and the caller fills the hole with the appropriate information.

In other words, create parameterized versions of all the above duplicated code fragments.

Something like this:

function viewPostsWithFetchMore<P>(form: Form, param: P, fetchFn: (p: P, form: Form) => any) {

return <>

{viewPosts(form.posts)}

{form.cursor && <button disabled={form.sending}

onClick={vlens.cachePartial(fetchFn, param, form)}>More</button>}

</>

}If you do this, the call sites can transform this way:

// Inside the hashtag search view function

viewPostsWithFetchMore(form, hashtag, fetchMoreByHashtag)

// Inside the user posts view function

viewPostsWithFetchMore(form, userId, fetchMoreUserPosts)Now, the process can be repeated for the fetch function itself. We extract the common bits to a function with holes, and we supply the holes

async function fetchMoreUserPosts(userId: number, form: Form) {

return fetchMoreFromServer(form, { UserId: userId, Cursor: form.cursor }, server.PostsByUser)

}

async function fetchMoreByHashtag(hashtag: string, form: Form) {

return fetchMoreFromServer(form, { Hashtag: hashtag, Cursor: form.cursor }, server.PostsByHashtag)

}

async function fetchMoreFromServer<P>(form: Form, params: P, remoteFn: (p: P) => Promise<rpc.Response<server.Posts>>, ) {

form.sending = true

let [resp, err] = await remoteFn(params)

form.sending = false

vlens.scheduleRedraw()

if (resp) {

form.posts.push(...resp.Posts)

form.cursor = resp.Cursor

} else {

form.error = err

}

}The same thing can be done on the server side: extract the common parts of the duplicated code into a function that takes parameters for the different parts that you fill at the call site with the appropriate values:

type ByUserReq struct {

UserId int

Cursor []byte

}

func PostsByUser(ctx *vbeam.Context, req ByUserReq) (resp Posts, err error) {

return PostsBy(ctx, req.Cursor, req.UserId, UserPostsIdx)

}

type ByHashtagReq struct {

Hashtag string

Cursor []byte

}

func PostsByHashtag(ctx *vbeam.Context, req ByHashtagReq) (resp Posts, err error) {

return PostsBy(ctx, req.Cursor, req.Hashtag, HashTagsIdx)

}

const Limit = 2

func PostsBy[T comparable](ctx *vbeam.Context, cursor []byte, term T, index *vbolt.IndexInfo[int, T, time.Time]) (resp Posts, err error) {

var window = vbolt.Window{

Limit: Limit,

Direction: vbolt.IterateReverse,

Cursor: cursor,

}

var postIds []int

resp.Cursor = vbolt.ReadTermTargets(

ctx.Tx, // the transaction

index, // the index

term, // the query term

&postIds, // slice to store matching targets

window, // query windowing

)

vbolt.ReadSlice(ctx.Tx, PostsBkt, postIds, &resp.Posts)

generic.EnsureSliceNotNil(&resp.Posts)

generic.EnsureSliceNotNil(&resp.Cursor)

return

}You could go even crazier with the object oriented approach, create a base class and inherit into sub classes. I hate to even think about what that would look like.

Anyway, this approach, of extracting common code parts into a function with holes, is not very good actually. On one hand, yes, we are reducing code duplication, but on the other hand, we are creating new concepts, and now, someone trying to read the code has to understand why we have these generic higher order functions that take other functions and parameters as inputs.

Before, we had two parallel code paths. After, we still effectively have two code paths, but they are heavily intertwined.

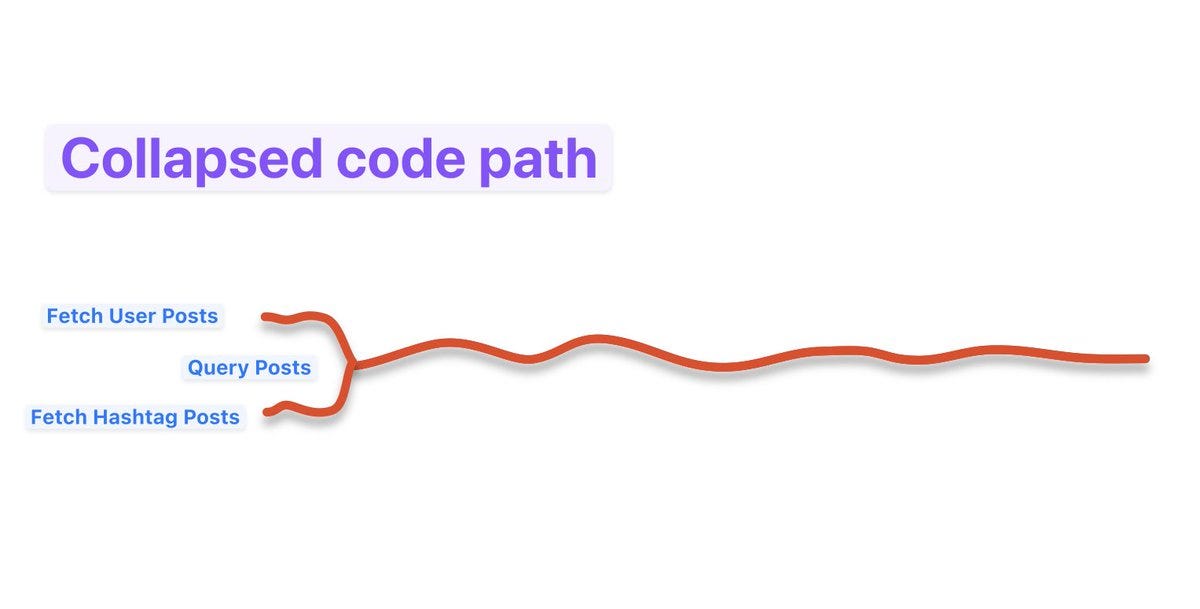

Simple approach

We can notice the reason for the code paths divergence is us having two different index objects, using a different type for the query term.

This can all go away if we just use the same index for both types of queries. After all, there's nothing special about querying by user id that makes it different from querying by hashtag. The fact that user ids are numeric is largely irrelevant, because we will serialize both to a byte buffer before we pass it to the index. The only thing we need to worry about is avoiding overlap between the two types of queries, and this is trivial to achieve by using a prefix. This allows us to use different types of prefixes for different types of queries that we will add in the future.

For instance, we can use '#' for hashtags and '@' for user ids, or we can use 't:' for hashtags and 'u:' for user ids. It does not really matter what we use. It just matters that we are consistent.

So, we start by unifying the two index objects into one:

Then, when it comes time to create the post and update the index, we combine the tags terms and the user term into one list and set them on the new index:

terms := make([]string, 0, len(post.Tags)+1)

generic.Append(&terms, fmt.Sprintf("u:%d", post.UserId))

for _, tag := range post.Tags {

generic.Append(&terms, "t:"+tag)

}

priority := post.CreatedAt

vbolt.SetTargetTermsUniform(

ctx.Tx, // transaction

PostsIdx, // index reference

post.Id, // target

terms, // terms (slice)

priority, // priority (same for all terms)

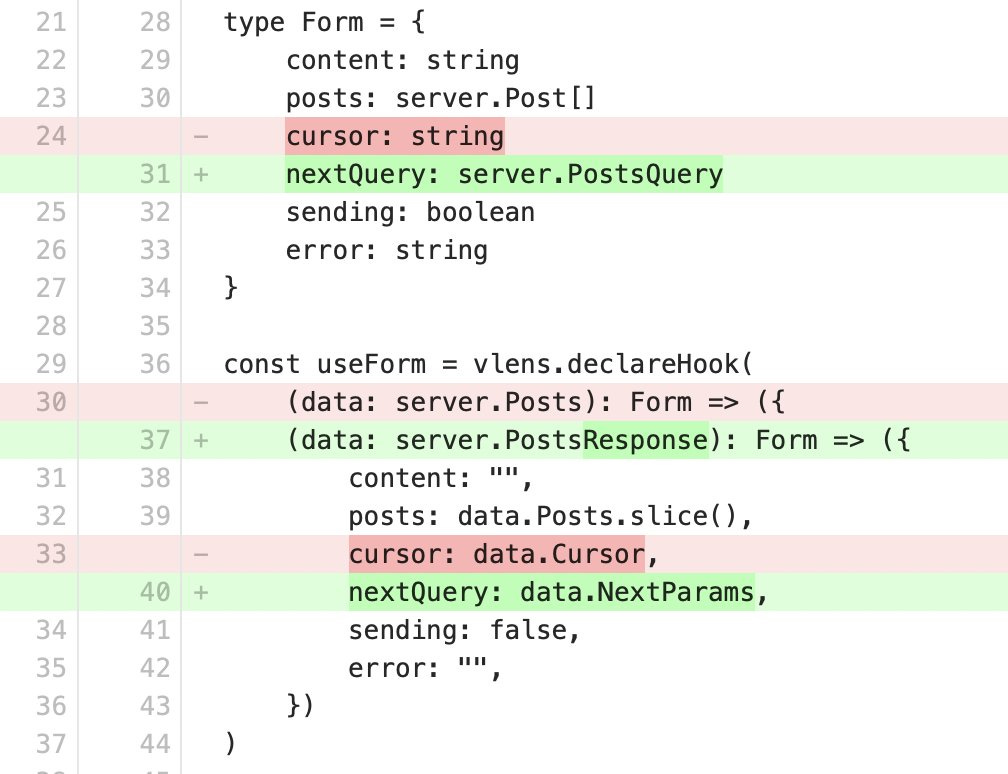

)Since we want to collapse the code paths, we also update the querying procedure, along with its inputs and outputs, in a way that helps the client code be more uniform.

type PostsQuery struct {

Query string

Cursor []byte

}

type PostsResponse struct {

Posts []Post

NextParams PostsQuery

}Now the posts query is basically the same as the previous two functions, except it does not care at all what type of terms you're looking for: the operation is all the same.

func QueryPosts(ctx *vbeam.Context, req PostsQuery) (resp PostsResponse, err error) {

var window = vbolt.Window{

Limit: Limit,

Direction: vbolt.IterateReverse,

Cursor: req.Cursor,

}

var postIds []int

resp.NextParams = req

resp.NextParams.Cursor = vbolt.ReadTermTargets(

ctx.Tx, // the transaction

PostsIdx, // the index

req.Query, // the query term

&postIds, // slice to store matching targets

window, // query windowing

)

vbolt.ReadSlice(ctx.Tx, PostsBkt, postIds, &resp.Posts)

generic.EnsureSliceNotNil(&resp.Posts)

generic.EnsureSliceNotNil(&resp.NextParams.Cursor)

return

}Next, we update the client side code to use 't:' and 'u:' prefixes on initial page load

export async function fetchUserPosts(route: string, prefix: string) {

const params = vlens.urlParams(route);

const userId = vlens.intParam(params, "user_id", 0);

return server.QueryPosts({ Query: 'u:' + userId, Cursor: "" })

}

export async function fetchByHashtag(route: string, prefix: string) {

const params = vlens.urlParams(route);

const hashtag = params.get("hashtag") ?? "";

return server.QueryPosts({ Query: 't:' + hashtag, Cursor: "" });

}Now because the response contains `NextParams`, the client side "fetch more" can be really simple. It doesn't need to care about the search type, or what page we are currently on.

It just stores the NextParams on initialization, and keeps storing it after every "fetch more" operation

The callback function for the "More" button can be really simple now

The fragment to display the list of posts followed by the "More" button is now also greatly simplified.

Now we've not only eliminated duplicate code; we've collapsed the code paths. The divergent part is very small: just the fetching function for different pages: fetchUserPosts and fetchHashtagPosts. Everything else after that is uniform.

This way of collapsing code paths is really powerful but it is hardly taught anywhere. It allows us to add more features without adding any complication.

Suppose, for instance, that we wanted to also index posts by year and year-month combinations. With this approach to collapsing code paths, we can easily just add more terms this way:

generic.Append(&terms, fmt.Sprintf("y:%d", post.CreatedAt.Year()))

generic.Append(&terms, fmt.Sprintf("m:%s", post.CreatedAt.Format("2006.01")))Where as, if we had taken the naive approach described above, where we painstakingly create generic functions with holes to be filled in by the caller, it would be more complicated: we'd have to create a new index (or two), new functions to query by dates, new "fetch more" client side functions to query by dates, etc. We'd just have a lot more boilerplate code to write. Which means increased friction towards anything we want to do to improve the querying function.

For example, we might consider supporting queries by "range". Since the Index is backed by a B-Tree, it's not difficult to see how we can query by a range of query terms, for example from 'm:2024.05' to 'm:2024.10'.

We can, for example, change the PostsQuery to be more like this:

We'd interpret `To` being unset to mean it's not a query range but a normal query. And because the response object will include the `NextParams`, the client code for "fetch more" does not have to change at all.

Having just one index object and one function to query it, means we can add features of this type without a lot of trouble.

Had we kept around many index objects and many querying functions, it would be a lot more effort to support this for all of them. We'd have more functions to change, more "query input" types to change, and more UI code to change.

Next, imagine if we wanted to support querying by multiple terms, some of which could be a range, and some of which would be just normal terms. For example, let's say we combine 'u:2' with 'm:2024.12' to get all the posts by the given user in the given month.

Again, we'd just have one type to change and one function to update. The input parameters could be change to look like this:

type QueryTerm struct {

From string

To string

}

type PostsQuery struct {

Terms []QueryTerm

Cursor []byte

}The function logic would be somewhat involved, but it would be tractable, because it only deals with one index.

Had we gone the naive approach and kept several index objects and several query param structs for each query type, it would be a lot more complicated. It would probably take a Herculean effort to to implement a function that lets you query by multiple query terms, because each query term would hit a different index, and you'd have to keep track of the type of each query parameter and the index associated with it.

Maybe something crazy like this:

type HashtagQuery struct {

Hashtag string

}

type ByUserQuery struct {

UserId int

}

type ByDateQuery struct {

From time.Time

To *time.Time

}

type PostQuery interface {

IsPostQuery() bool

CountItems(tx *bolt.Tx) int

PerformQuery(tx *bolt.Tx, cursor []byte, limit int) []int

// TODO: add whatever other methods are needed

// for the implementation of QueryPosts to work

// with a list of PostQuery items

}

// TODO: implement PostQuery for HashtagQuery, ByUserQuery, ByDateQuery

func ParseQueryString(query string) []PostQuery {

// somehow parse a complex query string into a list

// of query items that implement the interface

}

func QueryPosts(tx *vbolt.Tx, query []PostQuery) []Post {

// perform the query

}While this example is hypothetical, it's not mere speculation.

This is actually the kind of programming that happens in the wild. This is what senior engineers in most web companies spend their time doing. A team of ~6 engineers would spend two~three months to make this kind of query work.

As a side note: the interface PostQuery exists as a placeholder (a template with holes to be filled), and the content of the interface depends on the implementation details of QueryPosts as it attempts to combine multiple queries. I put `CountItems` in it because in my head I imagine it as part of the implementation, but maybe what we would need is something else.

Now, I'm not saying that implementing "query by multiple query terms" would otherwise be easy. It might be easy or it might be difficult. But either way, implementing it on one index object with one unified query type, is for sure going to be an order of magnitude simpler than implementing it on multiple indexes and multiple types of queries.

With our uniform structure, we only have to solve the basic problem: if we can do it on one index with one type of query term, we can trivially do it for all type combinations.

Where as, with the naive approach, we first have to figure out how to combine multiple query terms for one index, and then after we implement it and test it, we have to figure out how to generalize it to querying multiple indexes.

Should we actually try to implement this? I haven't thought about this problem that deeply, but since it's an interesting challenge, I'll give it some thought. We'll need to have a good deal of sample posts to work with, because it'd be difficult to assess the effectiveness of our implementation when we only have ~20 posts or so.

Originally my plan was to delay the implementation of "Search" as far back as possible, but something is urging me to focus on it sooner. Maybe I'll try it for the next episode! No promises, but stay tuned!

Migration

We changed how the index stores and queries data, but the data we've already saved and indexed is not using this new index, so if we just run the local server now and try to fetch posts by users or by hashtags, we'd see nothing, because the index has no data.

We have two ways to solve this:

(1) We can delete the database file and let the server create a new one when it starts up. At this stage we don't have a lot of data, so we can just manually recreate some fake sample data like we did before.

(2) We can run a migration process on startup to re-index all the existing posts.

I chose the latter because I have a system for it. I add some code to the OpenDB function to "apply db processes". I give each migration/process a unique label prefixed with 'year-monthday-'. The migration system uses the label to ensure the migration process is only ever executed once on the database, so this function is safe to keep around for a long time. I'll try to cover the migration system a bit more in depth at a later time.

vbolt.ApplyDBProcess(db, "2024-1217-unify-post-index", func() {

// delete old indexes

vbolt.WithWriteTx(db, func(tx *vbolt.Tx) {

tx.DeleteBucket([]byte("user-posts"))

tx.DeleteBucket([]byte("hashtags"))

tx.Commit()

})

log.Println("Deleted old indexes")

// populate the new index

vbolt.TxWriteBatches(db, PostsBkt, BatchSize, func(tx *vbolt.Tx, batch []Post) {

for _, post := range batch {

UpdatePostIndex(tx, post)

}

vbolt.TxCommit(tx)

})

})Demo

With that, here's a demo of the application after our changes. You can see from the network tab that we are using the new unified procedure.

Note: in the process of making this work, I had to fix a few bugs in vbolt. Make sure to run `go mod download` to pull in the dependencies.

Download the code: EP006.zip

View the code online: HandCraftedForum/tree/EP006